
Understanding the SAT: Computer-Adaptive Testing, Item Response Theory, and Smart Strategies for Success

By Dr. Mercy

The SAT has long been a key part of the college admissions journey, but recent changes—especially with the move to the Digital SAT—mean that students and parents need to understand more than just reading, writing, and math. One of the most important shifts is the adoption of Computer-Adaptive Testing (CAT). This post explains how CAT works, the science behind it (called Item Response Theory), what scored and unscored questions mean, and how students can prepare effectively.

What is Computer-Adaptive Testing (CAT) in the SAT?

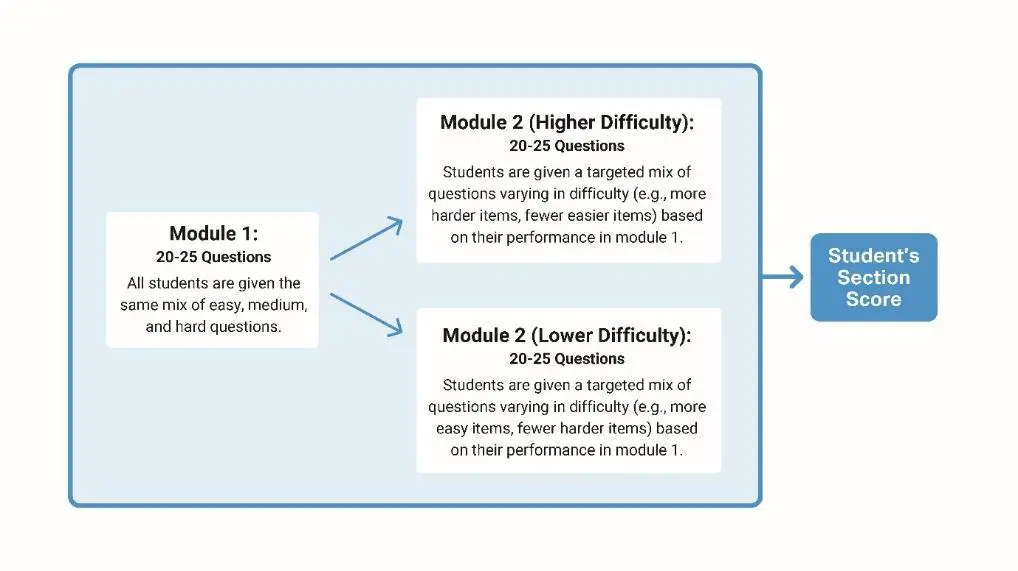

As of 2024, the SAT has fully transitioned to a digital, section-level adaptive format (international test takers made the switch in 2023). Unlike the traditional paper version, where all students answered the same set of questions, the Digital SAT adjusts the difficulty of the second module in each of its two sections (Reading and Writing, and Math) based on a student's performance in the first. Students who do well on the first module are routed to a more challenging second module, while others receive a less difficult one.
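For readers curious about the mechanics, here is a minimal Python sketch of module-level routing. It assumes a simple rule based on the fraction of first-module questions answered correctly. The cutoff value and the function name are hypothetical: the College Board does not publish its routing rules, which may rely on IRT ability estimates rather than a raw count.

```python
# Minimal sketch of two-stage (module-level) routing.
# ASSUMPTION: the cutoff is hypothetical; the real routing rule is not public.
HYPOTHETICAL_CUTOFF = 0.6  # fraction of module 1 answered correctly


def route_second_module(module1_responses: list[bool],
                        cutoff: float = HYPOTHETICAL_CUTOFF) -> str:
    """Return which version of module 2 a student would see."""
    if not module1_responses:
        raise ValueError("module 1 must contain at least one response")
    fraction_correct = sum(module1_responses) / len(module1_responses)
    # Only two fixed versions exist: one harder, one less difficult.
    return "harder" if fraction_correct >= cutoff else "easier"


strong = [True] * 18 + [False] * 4       # 18 of 22 correct
developing = [True] * 9 + [False] * 13   # 9 of 22 correct
print(route_second_module(strong))       # harder
print(route_second_module(developing))   # easier
```

The design point is that adaptation happens once per section, between modules, rather than after every question.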

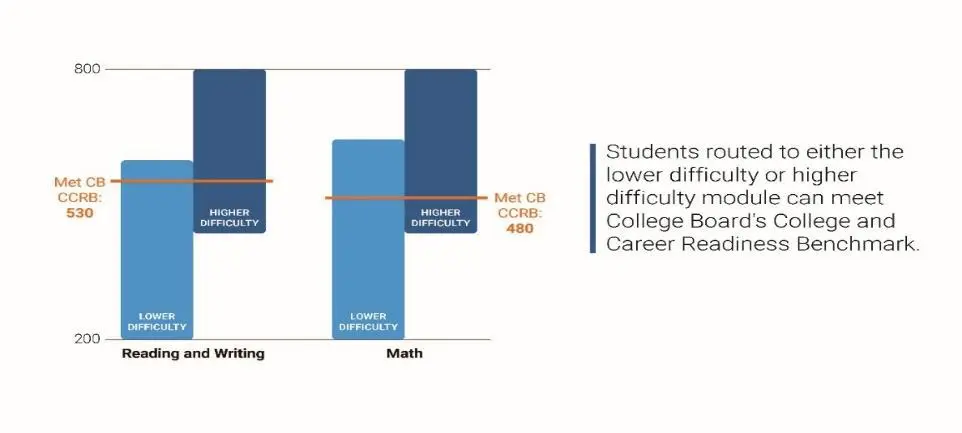

Each section continues to be scored on a 200–800 scale, with a total score ranging from 400 to 1600. These scores help gauge a student's readiness for college-level work; the College Board's college-readiness benchmarks are 480 in Reading and Writing and 530 in Math. Beyond the section and total scores, the score report also breaks down performance by content area, such as vocabulary, expression of ideas, problem-solving, and data analysis, offering a more nuanced picture of a student's strengths and areas for growth.
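The score arithmetic is simple enough to sketch in a few lines of Python. The function below is only an illustration (its name is hypothetical): it adds the two section scores and checks each one against the 480 and 530 benchmarks.

```python
# Illustrative only: combine section scores and compare with the benchmarks.
RW_BENCHMARK = 480
MATH_BENCHMARK = 530


def summarize(rw_score: int, math_score: int) -> dict:
    """Total score plus a benchmark check for each section."""
    for score in (rw_score, math_score):
        if not 200 <= score <= 800:
            raise ValueError("each section score must be between 200 and 800")
    return {
        "total": rw_score + math_score,  # always lands in the 400-1600 range
        "rw_meets_benchmark": rw_score >= RW_BENCHMARK,
        "math_meets_benchmark": math_score >= MATH_BENCHMARK,
    }


print(summarize(520, 510))
# {'total': 1030, 'rw_meets_benchmark': True, 'math_meets_benchmark': False}
```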

The test itself is more concise, clocking in at around two hours and 14 minutes. Features such as a built-in calculator for all Math questions, question flagging for review, a countdown timer, and an on-screen reference sheet enhance the test-taking experience. The adaptive nature of the Digital SAT allows it to remain rigorous while being shorter and more efficient than its predecessor.

Understanding Item Response Theory (IRT)

Item Response Theory is the statistical backbone of adaptive assessments like the Digital SAT. Unlike traditional scoring models that simply count correct answers, IRT takes a deeper look at how each response reflects a test taker's ability. It uses a model that estimates the probability of a student answering a question correctly based on the student's ability level and the properties of the question itself.

A widely used IRT model is the Three-Parameter Logistic (3PL) model, which describes each question with three parameters: difficulty, discrimination, and guessing. Difficulty measures how challenging a question is; questions that only high-achieving students tend to get right have higher difficulty ratings. Discrimination refers to how well a question distinguishes between stronger and weaker students. Finally, the guessing parameter sets a floor on the probability of a correct answer, accounting for the chance that even a low-ability student gets a multiple-choice question right simply by guessing.
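Written out in common textbook notation, the 3PL model gives the probability that a student with ability θ answers question i correctly, where a_i is the discrimination, b_i the difficulty, and c_i the guessing parameter. Some references also multiply the exponent by a scaling constant of about 1.7. The College Board has not published the exact form it uses, so treat this as the generic model rather than the SAT's specific implementation.

```latex
P_i(\theta) = c_i + (1 - c_i)\,\frac{1}{1 + e^{-a_i(\theta - b_i)}}
```

For example, a student whose ability exactly matches a question's difficulty (θ = b_i) has P_i(θ) = c_i + (1 - c_i)/2; with c_i = 0.25, as for a four-option question, that is 0.625. As θ falls, the probability approaches the floor c_i, and a larger a_i makes the curve rise more steeply around b_i.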

This approach provides a more refined estimate of a student's true ability. Two students could answer the same number of questions correctly but receive different scores if one successfully tackled more difficult questions. While this may seem counterintuitive, it aligns more closely with actual ability.
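To see how equal raw scores can lead to different ability estimates, here is a small Python sketch. It assumes the generic 3PL model with made-up question parameters (real SAT parameters are not public) and estimates ability by maximum likelihood using a simple grid search. The function names are hypothetical, and the College Board's actual estimation procedure may differ.

```python
import math

# HYPOTHETICAL question parameters (a, b, c): discrimination, difficulty, guessing.
EASIER_ITEMS = [(1.0, b, 0.25) for b in (-1.5, -1.0, -0.5, 0.0, 0.5)]
HARDER_ITEMS = [(1.0, b, 0.25) for b in (0.0, 0.5, 1.0, 1.5, 2.0)]


def p_correct(theta, a, b, c):
    """3PL probability that a student with ability theta answers correctly."""
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


def log_likelihood(theta, items, responses):
    """Log-probability of a whole right/wrong response pattern at ability theta."""
    total = 0.0
    for (a, b, c), correct in zip(items, responses):
        p = p_correct(theta, a, b, c)
        total += math.log(p if correct else 1.0 - p)
    return total


def estimate_theta(items, responses, lo=-4.0, hi=4.0, step=0.01):
    """Maximum-likelihood ability estimate found by a simple grid search."""
    grid = [lo + k * step for k in range(int(round((hi - lo) / step)) + 1)]
    return max(grid, key=lambda t: log_likelihood(t, items, responses))


# Both students get the first three right and the last two wrong: 3 of 5 correct.
pattern = [True, True, True, False, False]
theta_a = estimate_theta(EASIER_ITEMS, pattern)
theta_b = estimate_theta(HARDER_ITEMS, pattern)
print(f"Student A (easier questions): theta = {theta_a:.2f}")
print(f"Student B (harder questions): theta = {theta_b:.2f}")
```

Because every hypothetical question in the harder set is exactly 1.5 units more difficult than its counterpart in the easier set, Student B's estimate comes out about 1.5 units higher than Student A's, even though both answered three of five questions correctly.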

The College Board does not release specific question weights, but it has indicated that IRT plays a central role in scoring the Digital SAT. Because IRT places all questions on a common scale, scores can be calculated precisely and compared fairly even though students answer different sets of questions due to the test's adaptive nature.

Why IRT Offers an Advantage in Adaptive Testing

IRT enhances fairness and accuracy in testing. By evaluating not just the number of correct answers but also the difficulty and other properties of the questions answered, it provides a more meaningful measure of student ability. It also allows the test to be shorter without sacrificing accuracy. For example, after a student completes the first module of a section, their responses are analyzed to estimate ability, and the second module is then chosen to challenge the student appropriately.

Consider a scenario where two students, Sami and Antonio, get different numbers of questions correct. Sami answers more questions correctly, yet Antonio ends up with a higher estimated ability because he answered more difficult questions correctly. This illustrates how a simple count of correct answers can be misleading and why IRT-based adaptive scoring is considered more valid and reliable.
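The Sami and Antonio scenario can be reproduced with made-up numbers. This Python sketch swaps in expected a posteriori (EAP) estimation, a common IRT method that averages over a standard normal prior on ability. It is not necessarily the method the College Board uses, and the question parameters and function names are hypothetical.

```python
import math


def p_correct(theta, a, b, c):
    """3PL probability of a correct answer."""
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


def likelihood(theta, items, responses):
    """Probability of the full response pattern at ability theta."""
    value = 1.0
    for (a, b, c), correct in zip(items, responses):
        p = p_correct(theta, a, b, c)
        value *= p if correct else 1.0 - p
    return value


def eap_estimate(items, responses, lo=-4.0, hi=4.0, step=0.01):
    """Posterior mean of ability under a standard normal prior (grid approximation)."""
    numerator = denominator = 0.0
    for k in range(int(round((hi - lo) / step)) + 1):
        theta = lo + k * step
        # The normal prior's constant factor cancels in the ratio, so it is omitted.
        weight = likelihood(theta, items, responses) * math.exp(-theta * theta / 2.0)
        numerator += theta * weight
        denominator += weight
    return numerator / denominator


# HYPOTHETICAL parameters (a, b, c): Sami's questions are easier than Antonio's.
SAMI_ITEMS = [(1.0, b, 0.25) for b in (-2.0, -1.5, -1.0, -0.5, 0.0)]
ANTONIO_ITEMS = [(1.0, b, 0.25) for b in (0.5, 1.0, 1.5, 2.0, 2.5)]
sami_pattern = [True, True, True, True, False]      # 4 of 5 correct
antonio_pattern = [True, True, True, False, False]  # 3 of 5 correct

sami = eap_estimate(SAMI_ITEMS, sami_pattern)
antonio = eap_estimate(ANTONIO_ITEMS, antonio_pattern)
print(f"Sami:    {sum(sami_pattern)} correct, estimated ability {sami:.2f}")
print(f"Antonio: {sum(antonio_pattern)} correct, estimated ability {antonio:.2f}")
```

With these parameters, Sami answers four of five questions correctly and Antonio only three, yet Antonio's estimate comes out higher because his questions were considerably more difficult. The prior also keeps estimates finite for all-correct or all-wrong patterns, one practical reason EAP is popular.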

Scored vs. Unscored Questions: What You Should Know

The Digital SAT includes both scored and unscored questions. The scored items contribute directly to your section and total scores, reflecting core skills in reading, writing, and math. Because the test is adaptive, performance on the first module determines the difficulty of the second, and IRT scoring takes each question's properties into account, so your answers shape both what comes next and your final score.

Unscored questions, meanwhile, are included for research and development purposes. They help the College Board evaluate potential future test items. Importantly, these questions are indistinguishable from the scored ones, so students should treat every question with equal care and attention. Skipping or dismissing any question could lead to a lower score if that item turns out to be scored.
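The practical point about unscored questions can be illustrated with a deliberately simplified Python sketch. It counts correct answers on scored items only, whereas the real test uses IRT scoring, and the `Response` record and its `scored` flag are hypothetical; on test day that flag is hidden from students.

```python
from dataclasses import dataclass


@dataclass
class Response:
    correct: bool  # did the student answer correctly?
    scored: bool   # hypothetical flag: False for unscored (pretest) items


def scored_correct(responses: list[Response]) -> int:
    """Count correct answers on scored items only; unscored items are ignored."""
    return sum(1 for r in responses if r.scored and r.correct)


# HYPOTHETICAL module: the student cannot see which item is unscored.
module = [
    Response(correct=True, scored=True),
    Response(correct=True, scored=False),   # unscored: no effect on the score
    Response(correct=False, scored=True),   # dismissed as "probably unscored": costs a point
    Response(correct=True, scored=True),
]
print(scored_correct(module))  # 2
```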

Smart Strategies for Taking an Adaptive Test

Success on the adaptive Digital SAT isn't just about content knowledge; it's also about strategy. First and foremost, students should approach every question as if it counts, since there's no way to know which ones are scored, and performance on the first module affects the difficulty of the second.

In the Reading and Writing section, tackle straightforward questions first, such as vocabulary-in-context or basic grammar corrections, and address more interpretive or inference-based questions later. In Math, start with problems you're confident you can solve, especially among the earlier questions, and return to complex multi-step or word problems later. Use the built-in flagging tool to mark questions you want to revisit if time allows.

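Across all sections, a “Mark and Return” method works well: answer what you can confidently, mark the rest, and revisit them later. Keep in mind that you can move between questions only within the current module, so return to flagged questions before that module's time runs out. Additionally, trust your instincts on first attempts unless a glaring mistake becomes evident upon review.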

Finally, after each practice test or section, review mistakes not just by content type but by error type. Did you miss a question because of a concept misunderstanding, a misread question, or a careless mistake? Understanding the reason behind each mistake helps you avoid similar errors in the future.
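An error log can be as simple as a spreadsheet. As a small Python illustration with hypothetical question numbers, the sketch below tallies mistakes by the three error types (concept, misread, careless) so the most common one stands out.

```python
from collections import Counter

# HYPOTHETICAL error log from one practice section: (question number, error type).
error_log = [
    (3, "concept"),
    (7, "misread"),
    (11, "careless"),
    (14, "concept"),
    (18, "concept"),
    (21, "misread"),
]

# Count how often each error type occurs, most frequent first.
tally = Counter(error_type for _, error_type in error_log)
for error_type, count in tally.most_common():
    print(f"{error_type}: {count}")
```

Here concept errors dominate, which would suggest more content review before the next practice test rather than more timed drills.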

Final Thoughts for Students and Parents

The shift to adaptive testing and IRT-based scoring represents a modern, data-informed evolution in how student readiness is measured. While it may seem complex at first, this system is designed to be more accurate, more efficient, and ultimately, more fair. With a focused study plan, smart testing strategies, and a clear understanding of how the Digital SAT works, students can turn this innovation into an advantage on their path to college success.